FFmpeg 简易播放器

1.FFmpeg 和 SDL 下载

可以直接去官网下载源码自己编译或者下载已经编译好的版本

比如我直接用

brew install ffmpeg –with-sdl2

之后 /usr/local/Cellar 下就会有 ffmpeg 和 sdl2 了

2.开发环境

这里使用的是 MacOS + CLion

随便新建一个空工程就好,写好 CMakeLists 就好

2.1 CMakeLists 学习

-

include_directories()

Add the given directories to those the compiler uses to search for include files. Relative paths are interpreted as relative to the current source directory.

头文件搜索路径

-

link_directories()

Add directories in which the linker will look for libraries.

链接库搜索路径

-

target_link_libraries()

Specify libraries or flags to use when linking a given target and/or its dependents

指定链接时需要的链接库

-

add_executable()

Add an executable to the project using the specified source files.

通过指定的代码文件生成一个可执行文件

2.2 CMakeLists 关键部分

include_directories(/usr/local/Cellar/ffmpeg/4.2.2_3/include/ /usr/local/Cellar/sdl2/2.0.12_1/include/)

link_directories(/usr/local/Cellar/ffmpeg/4.2.2_3/lib/ /usr/local/Cellar/sdl2/2.0.12_1/lib/)

add_executable(simple_player simple_player.c)

target_link_libraries(

simple_player

avcodec

avdevice

avfilter

avformat

avresample

avutil

postproc

swresample

swscale

sdl2

)

2.3 设置参数

播放器接受一个文件路径作为参数,可以命令行启动或者设置 Run Configurations

3.FFmpeg 和 SDL 的几个函数

只是拎出来几个用到了的,具体参数含义自行阅读 API 文档

3.1 FFmpeg

-

3.1.1 avformat_open_input(AVFormatContext **ps, const char *url, ff_const59 AVInputFormat *fmt, AVDictionary **options);

Open an input stream and read the header. The codecs are not opened. The stream must be closed with avformat_close_input().

就是用来读取音频文件的信息的

avformat_close_input 同理

-

3.1.2 av_dump_format(AVFormatContext *ic, int index, const char *url, int is_output);

打印输入、输出流的各种信息,如码率等 -

3.1.3 vcodec_alloc_context3(const AVCodec *codec);

申请 AVCodecContext 空间。需要传递一个编码器,也可以不传,但不会包含编码器。如果不传,那么后面 avcodec_open2() 则需要传递一个 -

3.1.4 avcodec_parameters_to_context(AVCodecContext *codec, const AVCodecParameters *par);

该函数用于将流里面的参数,也就是 AVStream 里面的参数直接复制到 AVCodecContext 的上下文当中 -

3.1.5 avcodec_find_decoder(enum AVCodecID id);

寻找解码器 -

3.1.6 avcodec_open2(AVCodecContext *avctx, const AVCodec *codec, AVDictionary **options);

该函数用于初始化一个视音频编解码器的 AVCodecContext -

3.1.7 sws_getContext(int srcW, int srcH, enum AVPixelFormat srcFormat, int dstW, int dstH, enum AVPixelFormat dstFormat, int flags, SwsFilter *srcFilter, SwsFilter *dstFilter, const double *param);

Allocate and return an SwsContext. You need it to perform scaling/conversion operations using sws_scale().

图像处理的 context

-

3.1.8 av_frame_alloc()

分配一个 AVFrame 的内存空间 -

3.1.9 av_image_get_buffer_size(enum AVPixelFormat pix_fmt, int width, int height, int align);

此函数的功能是按照指定的宽、高、像素格式来分析图像内存 -

3.1.10 av_image_fill_arrays(uint8_t *dst_data[4], int dst_linesize[4], const uint8_t *src, enum AVPixelFormat pix_fmt, int width, int height, int align);

将 buffer 和 AVFrame 关联 -

3.1.11 av_read_frame(AVFormatContext *s, AVPacket *pkt);

读取码流中的音频若干帧或者视频一帧 -

3.1.12 avcodec_send_packet(AVCodecContext *avctx, const AVPacket *avpkt);

将码流中的数据放入队列中 -

3.1.13 avcodec_receive_frame(AVCodecContext *avctx, AVFrame *frame);

获取解码的输出数据

3.2 SDL

- 3.2.1 初始化

- SDL_Init(): 初始化SDL

- SDL_CreateWindow(): 创建窗口(Window)

- SDL_CreateRenderer(): 基于窗口创建渲染器(Render)

- SDL_CreateTexture(): 创建纹理(Texture)

- 3.2.2 循环渲染数据

- SDL_UpdateTexture(): 设置纹理的数据

- SDL_RenderCopy(): 纹理复制给渲染器

- SDL_RenderPresent(): 显示

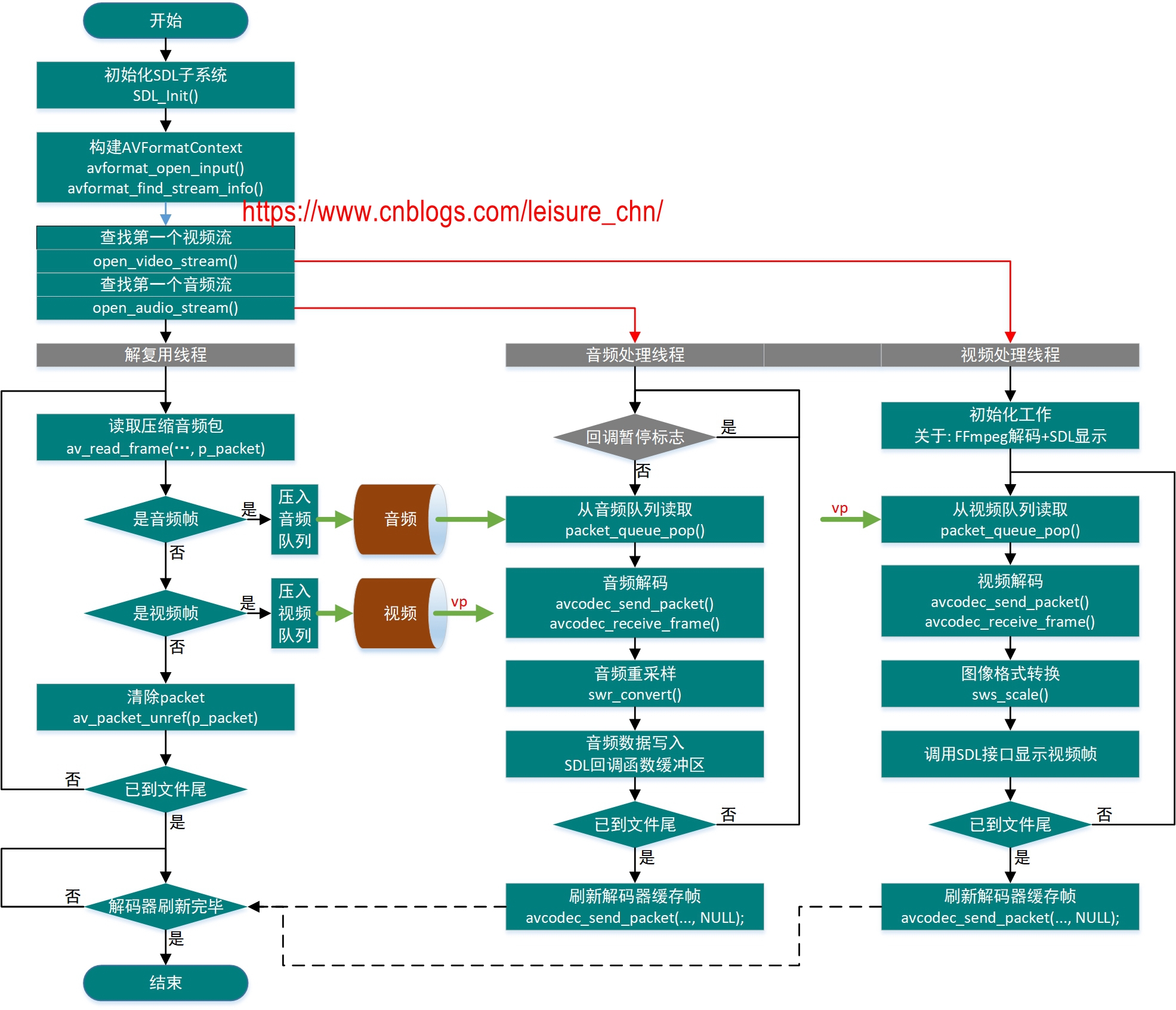

4.一个完整的播放器流程

这里是一个完善的流程图(借鉴来的),但是我们只需要一个简易的播放器,省略音频部分和音视频同步的部分

5.简易播放器源码

我一开始入门的目的,就是想看看怎么能写出一个播放器,大家入门肯定都跟我一样,这里放一个没有声音的简易版本播放器,网上也能搜到很多类似的代码

#include <libavformat/avformat.h>

#include <libavcodec/avcodec.h>

#include <libswscale/swscale.h>

#include <libavutil/imgutils.h>

#include <SDL2/SDL.h>

#include <SDL2/SDL_thread.h>

int main(int argc, char *argv[]) {

if (argc < 2) {

return -1;

}

int ret = -1, i = -1, v_stream_idx = -1;

char *vf_path = argv[1];

AVFormatContext *fmt_ctx = NULL;

AVCodecContext *codec_ctx = NULL;

AVCodec *codec;

AVFrame *frame;

AVPacket packet;

ret = avformat_open_input(&fmt_ctx, vf_path, NULL, NULL);

if (ret < 0) {

printf("Open video file %s failed \n", vf_path);

goto end;

}

if (avformat_find_stream_info(fmt_ctx, NULL) < 0)

goto end;

av_dump_format(fmt_ctx, 0, vf_path, 0);

for (i = 0; i < fmt_ctx->nb_streams; i++) {

if (fmt_ctx->streams[i]->codecpar->codec_type == AVMEDIA_TYPE_VIDEO) {

v_stream_idx = i;

break;

}

}

if (v_stream_idx == -1) {

printf("Cannot find video stream\n");

goto end;

}

codec_ctx = avcodec_alloc_context3(NULL);

avcodec_parameters_to_context(codec_ctx, fmt_ctx->streams[v_stream_idx]->codecpar);

codec = avcodec_find_decoder(codec_ctx->codec_id);

if (codec == NULL) {

printf("Unsupported codec for video file\n");

goto end;

}

if (avcodec_open2(codec_ctx, codec, NULL) < 0) {

printf("Can not open codec\n");

goto end;

}

if (SDL_Init(SDL_INIT_VIDEO | SDL_INIT_AUDIO | SDL_INIT_TIMER)) {

printf("Could not init SDL due to %s", SDL_GetError());

goto end;

}

SDL_Window *window;

SDL_Renderer *renderer;

SDL_Texture *texture;

SDL_Event event;

SDL_Rect r;

window = SDL_CreateWindow("SDL_CreateTexture", SDL_WINDOWPOS_UNDEFINED,

SDL_WINDOWPOS_UNDEFINED, codec_ctx->width, codec_ctx->height,

SDL_WINDOW_RESIZABLE);

r.x = 0;

r.y = 0;

r.w = codec_ctx->width;

r.h = codec_ctx->height;

renderer = SDL_CreateRenderer(window, -1, 0);

texture = SDL_CreateTexture(renderer, SDL_PIXELFORMAT_YV12, SDL_TEXTUREACCESS_STREAMING,

codec_ctx->width, codec_ctx->height);

struct SwsContext *sws_ctx = NULL;

sws_ctx = sws_getContext(codec_ctx->width, codec_ctx->height, codec_ctx->pix_fmt,

codec_ctx->width, codec_ctx->height, AV_PIX_FMT_YUV420P, SWS_BILINEAR, NULL, NULL, NULL);

frame = av_frame_alloc();

int ret1, ret2;

AVFrame *pict;

pict = av_frame_alloc();

int numBytes;

uint8_t *buffer = NULL;

numBytes = av_image_get_buffer_size(AV_PIX_FMT_YUV420P, codec_ctx->width, codec_ctx->height, 1);

buffer = (uint8_t *) av_malloc(numBytes * sizeof(uint8_t));

// required, or bad dst image pointers

av_image_fill_arrays(pict->data, pict->linesize, buffer, AV_PIX_FMT_YUV420P, codec_ctx->width, codec_ctx->height,

1);

while (1) {

SDL_PollEvent(&event);

if (event.type == SDL_QUIT)

break;

ret = av_read_frame(fmt_ctx, &packet);

if (ret < 0) {

continue;

}

if (packet.stream_index == v_stream_idx) {

ret1 = avcodec_send_packet(codec_ctx, &packet);

if (ret1 < 0) {

continue;

}

ret2 = avcodec_receive_frame(codec_ctx, frame);

if (ret2 < 0) {

continue;

}

sws_scale(sws_ctx, (uint8_t const *const *) frame->data,

frame->linesize, 0, codec_ctx->height,

pict->data, pict->linesize);

SDL_UpdateYUVTexture(texture, &r, pict->data[0], pict->linesize[0],

pict->data[1], pict->linesize[1],

pict->data[2], pict->linesize[2]);

SDL_RenderClear(renderer);

SDL_RenderCopy(renderer, texture, NULL, NULL);

SDL_RenderPresent(renderer);

SDL_Delay(16);

}

av_packet_unref(&packet);

}

SDL_DestroyRenderer(renderer);

SDL_Quit();

av_frame_free(&frame);

avcodec_close(codec_ctx);

avcodec_free_context(&codec_ctx);

end:

avformat_close_input(&fmt_ctx);

printf("Shutdown\n");

return 0;

}

6.参考资料

[1] 雷霄骅 https://blog.csdn.net/leixiaohua1020/

[2] https://www.cnblogs.com/yongdaimi/p/9804699.html

[3] https://www.cnblogs.com/leisure_chn/p/10235926.html